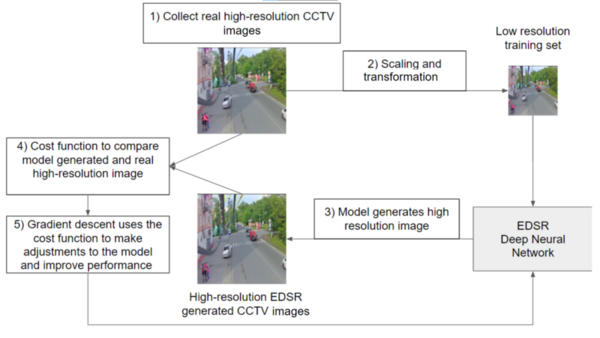

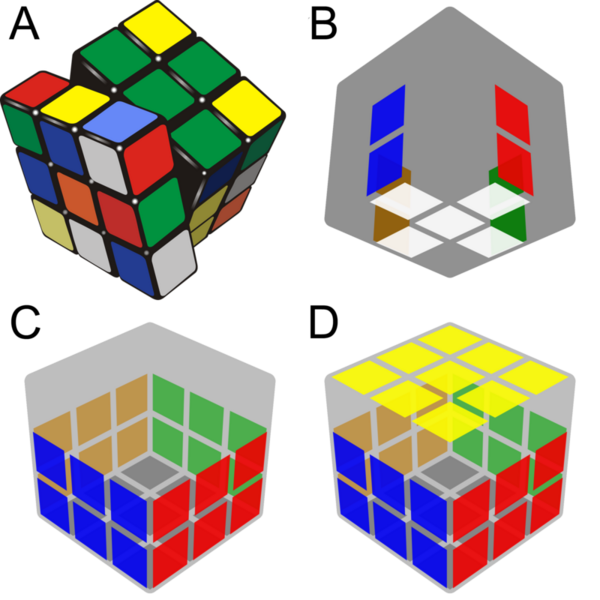

Deep residual neural networks for increasing the resolution of CCTV images

In this study, the authors hypothesized that closed-circuit television images could be stored with improved resolution by using enhanced deep residual (EDSR) networks.

An analysis of the feasibility of SARIMAX-GARCH through load forecasting

The authors found that SARIMAX-GARCH is more accurate than SARIMAX for load forecasting with respect to energy consumption.

The effect of COVID-19 on the USA house market

COVID-19 has impacted the way many people go about their daily lives, but what are the main factors driving the changes in the housing market, particularly house prices?

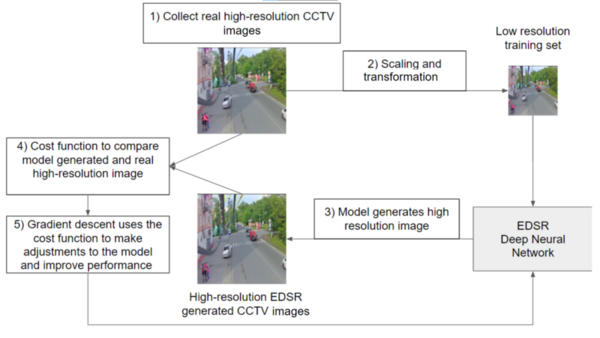

Inflated scores on the online exams during the COVID-19 pandemic school lockdown

In this study, the authors explored whether students' test scores were significantly higher on online exams during the COVID-19 school lockdown when compared to those of the in-person exams before the lockdown.

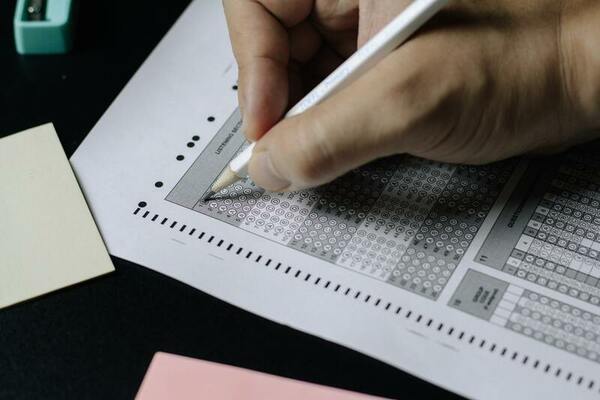

Rubik’s cube: What separates the fastest solvers from the rest?

In this study, the authors assess the factors that allow some speedcubers to solve Rubik's Cubes faster than others.

Constructing an equally weighted stock portfolio based on systematic risk (beta)

In this article, the authors investigate whether stock selection across various sectors is efficient enough to outperform an overall market. Stocks from 2006 to 2020 were selected across sectors to calculate beta values using the Capital Asset Pricing Model.
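
As a rough illustration of the beta calculation mentioned above, the sketch below uses the standard definition of beta as the covariance of a stock's returns with the market's returns divided by the variance of the market's returns, and averages returns for an equally weighted portfolio. It is a minimal sketch, not the authors' procedure: the function names and the return series are hypothetical, made-up values for illustration only.

```python
def mean(values):
    """Arithmetic mean of a non-empty sequence."""
    return sum(values) / len(values)


def beta(stock_returns, market_returns):
    """Beta = Cov(stock, market) / Var(market).

    The 1/(n-1) factors of the sample covariance and sample variance
    cancel, so only the sums of products are needed.
    """
    if len(stock_returns) != len(market_returns) or len(market_returns) < 2:
        raise ValueError("need two return series of equal length >= 2")
    stock_mean = mean(stock_returns)
    market_mean = mean(market_returns)
    cov = sum((s - stock_mean) * (m - market_mean)
              for s, m in zip(stock_returns, market_returns))
    var = sum((m - market_mean) ** 2 for m in market_returns)
    if var == 0:
        raise ValueError("market returns have zero variance")
    return cov / var


def equal_weight_return(stock_period_returns):
    """Return of an equally weighted portfolio for one period."""
    return mean(stock_period_returns)


# Hypothetical data: the stock moves exactly twice as much as the market.
market = [0.01, -0.02, 0.03, 0.00]
stock = [0.02, -0.04, 0.06, 0.00]
stock_beta = beta(stock, market)
portfolio_return = equal_weight_return([0.02, 0.04])
```

A beta above 1 indicates a stock that has historically moved more than the market, and a beta below 1 one that has moved less. Equal weighting simply gives every selected stock the same share of the portfolio.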

Similarity Graph-Based Semi-supervised Methods for Multiclass Data Classification

The purpose of the study was to determine whether graph-based machine learning techniques, which have become more prevalent in recent years, can accurately classify data into one of many classes while requiring less labeled training data and parameter tuning than traditional machine learning algorithms. The results showed that the accuracy of both graph-based and traditional classification algorithms depends directly on the number of features in each dataset, the number of classes in each dataset, and the amount of labeled training data used.

Open Source RNN designed for text generation is capable of composing music similar to Baroque composers

Recurrent neural networks (RNNs) are useful for text generation since they can generate outputs in the context of previous ones. Baroque music and language are similar, as every word or note exists in context with others, and they both follow strict rules. The authors hypothesized that if music is represented in a text format, an RNN designed to generate language could train on it and create music structurally similar to Bach’s. They found that the music generated by their RNN shared a similar structure with Bach’s music in the input dataset, while Bachbot’s outputs differed significantly from their RNN’s outputs and were thus less similar to Bach’s repertoire.

Statistically Analyzing the Effect of Various Factors on the Absorbency of Paper Towels

In this study, the authors investigate how effectively paper towels absorb different types of liquid and whether changing the properties of the towel (such as folding it) affects absorbency. Varying either the liquid type or the folded state of the paper towels, they draw conclusions about the optimal ways to use paper towels. These findings matter as society continues to use more and more paper towels.

Geographic Distribution of Scripps National Spelling Bee Spellers Resembles Geographic Distribution of Child Population in US States upon Implementation of the RSVBee “Wildcard” Program

The Scripps National Spelling Bee (SNSB) is an iconic academic competition for United States (US) schoolchildren, held annually since 1925. However, the sizes and geographic distributions of sponsored regions are uneven. One state may send more than twice as many spellers as another state, despite having a similar child population. In 2018, the SNSB introduced a wildcard program known as RSVBee, which allowed students to apply to compete as national finalists even if they did not win their regional spelling bee. In this study, the authors tested the hypothesis that the geographic distribution of SNSB national finalists more closely matched the child population of the US after RSVBee was implemented.
