Predicting clogs in water pipelines using sound sensors and machine learning linear regression

The authors looked at the ability of sound sensors to predict clogged pipes when the sound intensity data are run through a machine learning algorithm.

Predicting and explaining illicit financial flows in developing countries: A machine learning approach

The authors looked at the ability of different machine learning algorithms to predict the level of financial corruption in different countries.

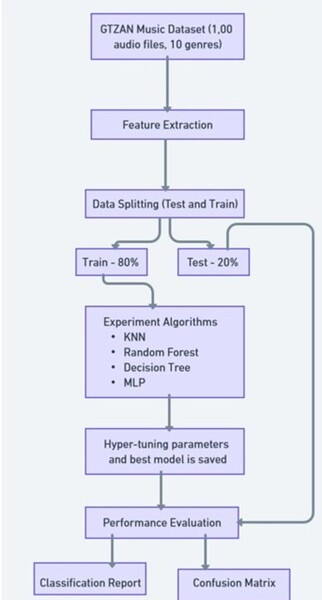

Impact of length of audio on music classification with deep learning

The authors looked at how the length of the audio clip taken from a song affected the ability to classify it correctly by musical genre.

Machine learning-based enzyme engineering of PETase for improved efficiency in plastic degradation

Here, recognizing the growing threat of non-biodegradable plastic waste, the authors investigated whether a modified enzyme identified in bacteria could be used to decompose polyethylene terephthalate (PET). They used simulations to screen and identify an optimized enzyme based on machine learning models. Ultimately, they identified potential mutant PETases capable of decomposing PET with improved thermal stability.

Deep dive into predicting insurance premiums using machine learning

The authors looked at how well different factors, such as age, pre-existing conditions, and geographic region, predict an individual's health insurance premium.

Can Green Tea Alleviate the Effects of Stress Related to Learning and Long-Term Memory in the Great Pond Snail (Lymnaea stagnalis)?

Stress and anxiety have become more prevalent in recent years, with teenagers especially at risk. Recent studies show that experiencing stress while learning can impair brain-cell communication, thus negatively impacting learning. Green tea is believed to have the opposite effect, aiding learning and memory retention. In this study, the authors used Lymnaea stagnalis, a pond snail, to explore the relationship between green tea and a stressor that impairs memory formation, and to determine the effects of both green tea and stress on the snails’ ability to learn and to form and retain memories. Using a conditioned taste aversion (CTA) assay, in which snails are exposed to a sweet substance followed by a bitter taste and the number of biting responses is recorded, the authors found that stress was harmful to snail learning and to short-term, intermediate, and long-term memory.

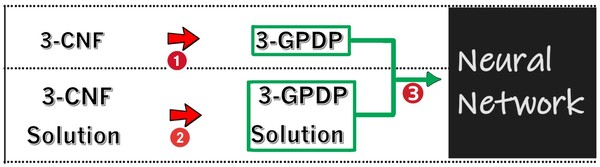

Solving a new NP-Complete problem that resembles image pattern recognition using deep learning

In this study, the authors tested how accurately a neural network can identify patterns in complex number matrices.

Artificial Intelligence Networks Towards Learning Without Forgetting

In their paper, Kreiman et al. examined what it takes for an artificial neural network to perform well on a new task without forgetting its previous knowledge. By comparing methods that prevent task forgetting, they found that longer training times, and preserving the connections most important to one task while training on a new one, helped the neural network maintain its performance on both tasks. The authors hope that this proof-of-principle research will someday contribute to artificial intelligence that better mimics natural human intelligence.

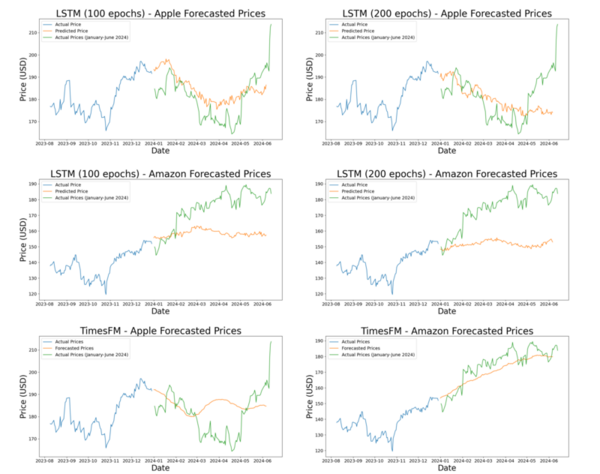

Stock price prediction: Long short-term memory vs. Autoformer and time series foundation model

The authors looked at the ability of various machine learning models to predict future stock prices.

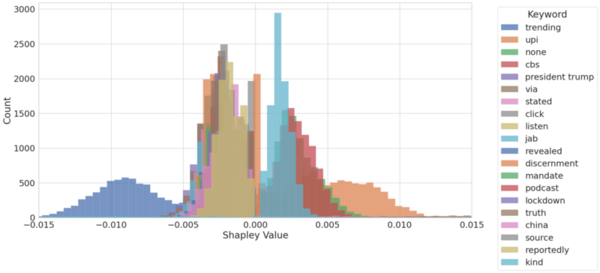

An explainable model for content moderation

The authors looked at the ability of machine learning algorithms to interpret language, given their increasing use in moderating content on social media. Using an explainable model, they achieved 81% accuracy in distinguishing fake from real news based on the language of posts alone.
