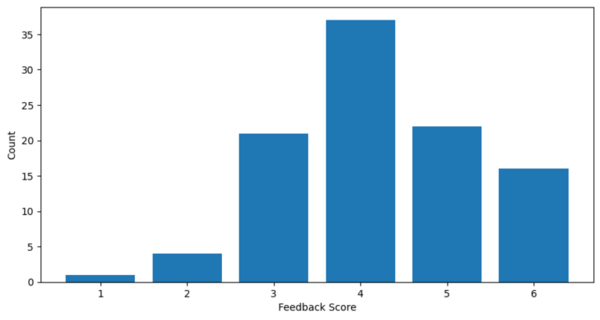

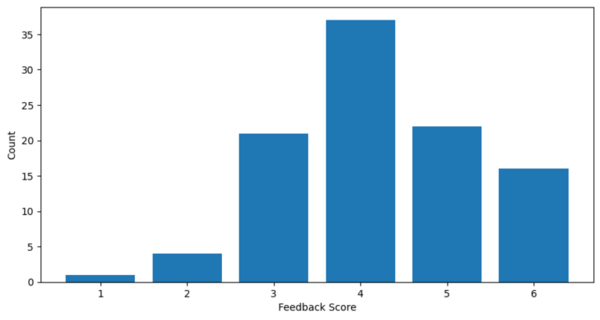

Assessing large language models for math tutoring effectiveness

The authors examine how effectively Large Language Models (LLMs) such as BERT, MathBERT, and OpenAI GPT-3.5 assist middle school students with math word problems, particularly following the decline in math performance after COVID-19.

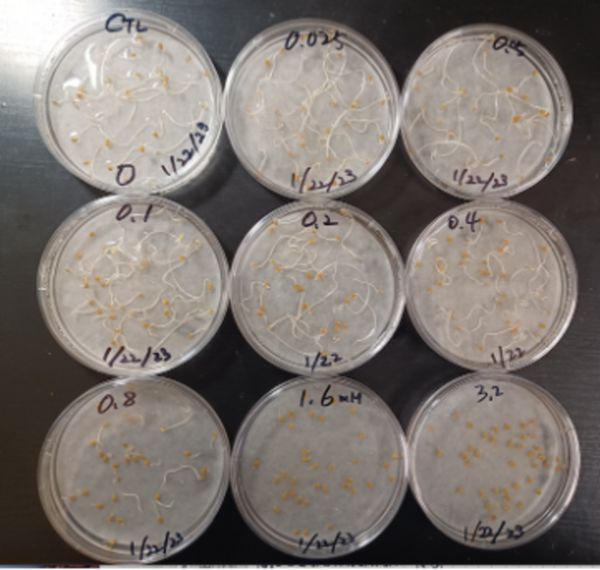

Lettuce seed germination in the presence of microplastic contamination

Microplastic pollution is a pressing environmental issue, particularly because of its potential impacts on ecosystems and human health. In this study, we explored the ability of plants, specifically those cultivated for human consumption, to absorb microplastics from their growing medium. We found no evidence of microplastic absorption in either intact or mechanically damaged roots. This outcome suggests that microplastics larger than 10 μm may not be readily absorbed by the root systems of leafy crops such as lettuce (Lactuca sativa).

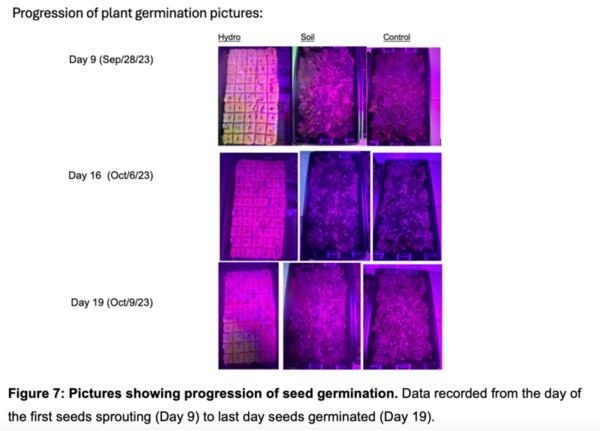

An exploration of western mosquitofish as the animal component in an aquaponic farming system

Aquaponics (the combination of aquatic plant farming with fish production) is an innovative farming practice, but the fish that are typically used, like tilapia, are expensive and space-consuming to cultivate. Medina and Alvarez test whether mosquitofish are a viable alternative in the aquaponic cultivation of herbs and microgreens.

Lactic acid bacteria protect the growth of Solanum lycopersicum from Sodium dodecyl sulfate

Sodium dodecyl sulfate (SDS), a detergent component, can harm plant growth when it contaminates soil and waterways. The authors explored the potential of lactic acid bacteria (LAB) to mitigate SDS-induced stress on plants.

Efficacy of natural coagulants in reducing water turbidity under future climate change scenarios

Here, the authors investigated the effects of natural coagulants on reducing the turbidity of water samples from the Tennessee River Watershed. They found that, for eggshells, turbidity reduction was higher at lower temperatures. They then projected and mapped turbidity reactions for eggshells under two climate change scenarios and three future time spans. They found that site-specific and time-varying turbidity reactions using natural coagulants could be useful for optimal water treatment plans.

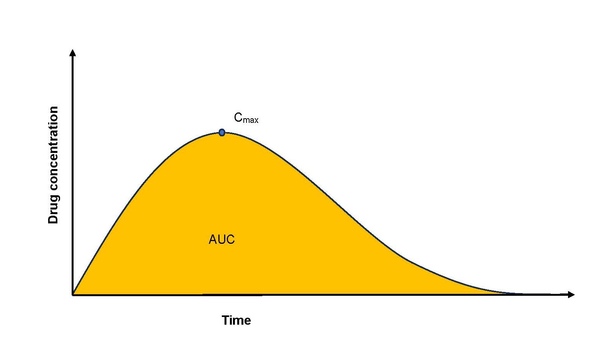

pH-dependent drug interactions with acid reducing agents

Some cancer treatments lose efficacy when combined with treatments for excessive stomach acid, because the acid treatments change the stomach environment. Lin and Lin survey the information available on oral cancer drugs to determine what is documented about these interactions.

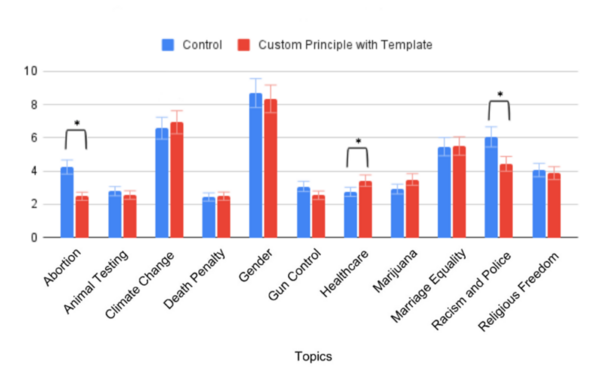

Unveiling bias in ChatGPT-3.5: Analyzing constitutional AI principles for politically biased responses

Various methods exist to mitigate bias in AI models, including "Constitutional AI," a technique which guides the AI to behave according to a list of rules and principles. Lo, Poosarla, Singhal, Li, Fu, and Mui investigate whether constitutional AI can reduce bias in AI outputs on political topics.

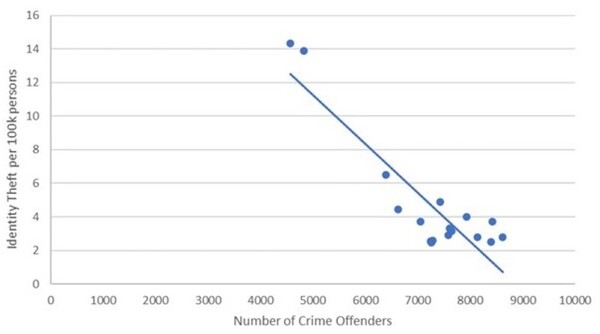

Understanding the battleground of identity fraud

The authors examined variables associated with identity fraud in the US. They found that the national unemployment rate and online banking usage are among the significant variables that explain identity fraud.

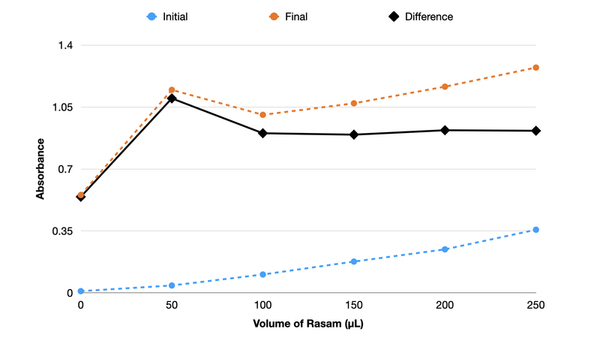

Antibacterial activity of homemade Indian tomato tamarind soup (rasam) against common pathogens

Systematic consumption of traditional foods is a popular way of treating diseases in India. Rasam, a soup of spices and tomato with a tamarind base, is a home remedy for viral infections such as the common cold. Here, we investigate if rasam, prepared under household conditions, exhibits antibacterial activity against Escherichia coli and Staphylococcus aureus, two common pathogenic bacteria. Our results show rasam prepared under household conditions lacks antibacterial activity despite its ingredients possessing such properties.

Exploring the effects of diverse historical stock price data on the accuracy of stock price prediction models

Americans increasingly use algorithmic trading. In this work, we tested whether including the opening, closing, and highest prices in three supervised learning models affected their performance. We found that including all three prices significantly decreased the prediction error.
