Unveiling bias in ChatGPT-3.5: Analyzing constitutional AI principles for politically biased responses

(1) Aspiring Scholars Directed Research Program, Evergreen Valley High School, (2) Aspiring Scholars Directed Research Program, Mission San Jose High School, (3) Aspiring Scholars Directed Research Program, Monta Vista High School, (4) Aspiring Scholars Directed Research Program, Basis Independent Silicon Valley, (5) Aspiring Scholars Directed Research Program, Saratoga High School, (6) Aspiring Scholars Directed Research Program, Salesforce AI Research

https://doi.org/10.59720/24-047

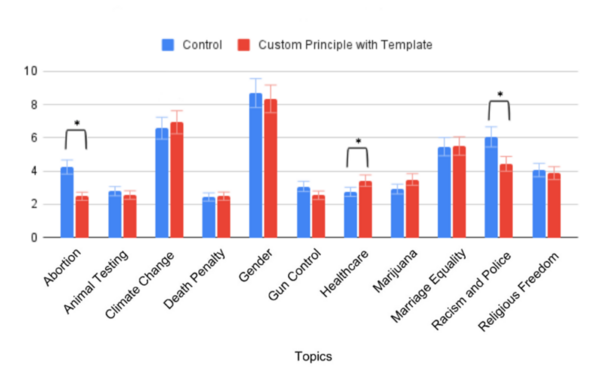

OpenAI's GPT models have been applied across a wide range of applications in many industries. In a previous study, Sinha et al. (2023), our group quantified the bias in responses from OpenAI's GPT-3.0 model across various political subjects. Our results revealed a statistically significant left-leaning political bias in GPT-3.0’s responses for 9 of the 11 political topics analyzed. In this research, we employed Anthropic's Constitutional AI principles in an attempt to mitigate political bias in GPT-3.5. These principles define the core rules an AI model must follow to remain harmless and helpful. We conducted a series of tests that applied custom constitutional principles intended to reduce political bias. We hypothesized that applying Anthropic’s Constitutional AI principles would produce a statistically significant reduction in the politically biased responses generated by ChatGPT. We observed a significant reduction in bias for the “abortion” and “racism and police” topics when our custom principle was combined with a tailored prompt template. Surprisingly, for the other topics, our study did not uncover a significant reduction in bias in ChatGPT’s responses. This suggests that while constitutional principles can effectively mitigate bias in certain areas, applying them consistently across a broader range of topics requires further refinement and research.
