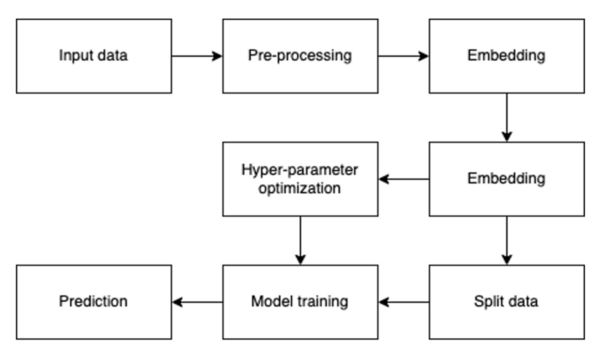

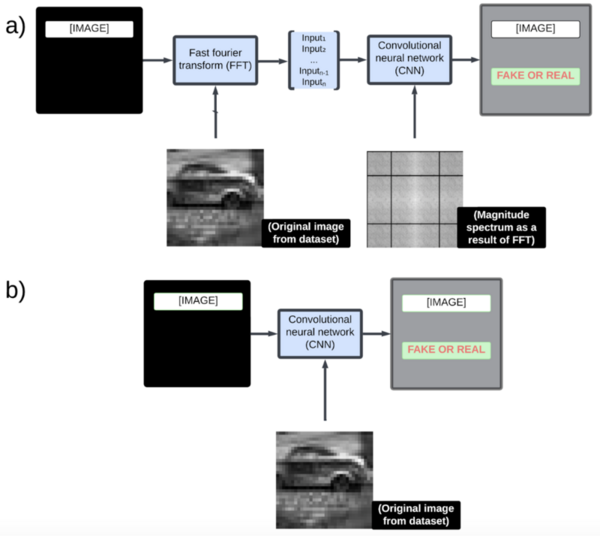

Recent advances in generative AI have made it increasingly hard to distinguish real images from AI-generated ones. Traditional detectors built on convolutional neural networks (CNNs) or U-Net architectures lack precision because they overlook key details in both the spatial and the frequency domains. This study introduces a hybrid model that combines a CNN with the Fast Fourier Transform (FFT) to better capture subtle edge and texture patterns.
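The summary does not say how the CNN and the FFT are combined. The sketch below assumes one common option, early fusion: the image's centered log-magnitude spectrum is stacked with the image itself as an extra input channel, so a CNN sees spatial and frequency information together. The function names `fft_log_magnitude`, `build_hybrid_input` and `high_frequency_ratio` are hypothetical. The high-frequency energy ratio is an illustrative diagnostic added here, not a component reported by the study. It shows why the frequency domain exposes edge and texture detail.

```python
import numpy as np


def fft_log_magnitude(image: np.ndarray) -> np.ndarray:
    """Centered log-magnitude spectrum of a 2-D grayscale image, scaled to [0, 1]."""
    spectrum = np.fft.fftshift(np.fft.fft2(image))  # move the DC component to the center
    log_mag = np.log1p(np.abs(spectrum))             # compress the large dynamic range
    span = log_mag.max() - log_mag.min()
    return (log_mag - log_mag.min()) / span if span > 0 else np.zeros_like(log_mag)


def build_hybrid_input(image: np.ndarray) -> np.ndarray:
    """Hypothetical early fusion: stack spatial and frequency maps as a (2, H, W) CNN input."""
    img = image.astype(np.float64)
    span = img.max() - img.min()
    spatial = (img - img.min()) / span if span > 0 else np.zeros_like(img)
    return np.stack([spatial, fft_log_magnitude(img)], axis=0)


def high_frequency_ratio(image: np.ndarray, radius_frac: float = 0.25) -> float:
    """Fraction of spectral power outside a central low-frequency disk (illustrative only)."""
    img = image.astype(np.float64)
    power = np.abs(np.fft.fftshift(np.fft.fft2(img))) ** 2
    h, w = img.shape
    yy, xx = np.ogrid[:h, :w]
    # After fftshift the DC component sits at (h // 2, w // 2).
    dist = np.hypot(yy - h // 2, xx - w // 2)
    low = dist <= radius_frac * min(h, w) / 2
    total = power.sum()
    return float(power[~low].sum() / total) if total > 0 else 0.0


if __name__ == "__main__":
    smooth = np.ones((8, 8))                                           # no edges at all
    checker = (np.indices((8, 8)).sum(axis=0) % 2).astype(np.float64)  # edges everywhere
    print(build_hybrid_input(checker).shape)
    print(high_frequency_ratio(smooth), high_frequency_ratio(checker))
```

A flat image puts all of its power at the DC term, so its ratio is essentially zero. A checkerboard splits its power between DC and the highest-frequency bin, so its ratio is about 0.5. A two-branch design, with separate CNNs for the spatial and spectral inputs whose features are concatenated later, is an equally plausible reading of "hybrid" and would reuse the same spectrum computation.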
