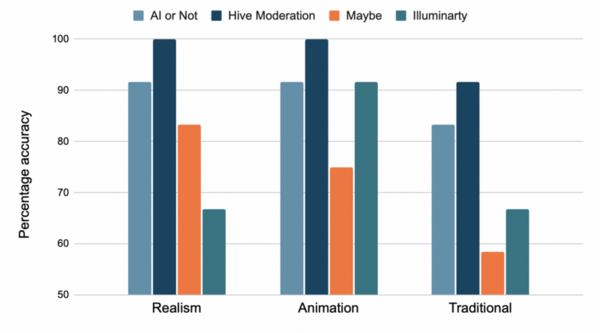

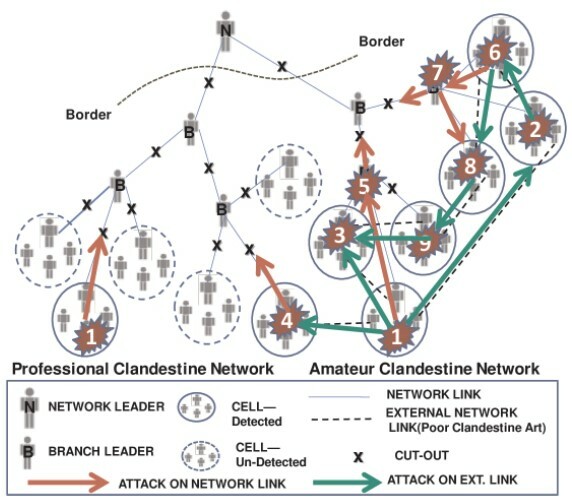

Evaluating the effectiveness of machine learning models for detecting AI-generated art

The authors investigate how well AI-detection machine learning models can distinguish real art from AI-generated art across different art styles.
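The key question here is whether detection performance holds up style by style. A minimal sketch of that kind of per-style evaluation, assuming each prediction is recorded as a (style, true label, predicted label) tuple; the function name `accuracy_by_style`, the style names, and the toy records are hypothetical and are not the authors' data or code:

```python
from collections import defaultdict


def accuracy_by_style(records):
    """Return {style: fraction of correct predictions} for (style, truth, pred) tuples."""
    correct = defaultdict(int)
    total = defaultdict(int)
    for style, truth, pred in records:
        total[style] += 1
        if truth == pred:
            correct[style] += 1
    # One accuracy per style, in the order styles were first seen
    return {style: correct[style] / total[style] for style in total}


# Hypothetical toy predictions, for illustration only (not data from the study)
records = [
    ("impressionism", "ai", "ai"),
    ("impressionism", "real", "ai"),
    ("impressionism", "real", "real"),
    ("impressionism", "ai", "ai"),
    ("cubism", "ai", "real"),
    ("cubism", "real", "real"),
]

print(accuracy_by_style(records))  # {'impressionism': 0.75, 'cubism': 0.5}
```

Reporting accuracy separately for each style, rather than as one pooled figure, is what shows whether a detector does worse on some styles than on others.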

More efficient sources of water distribution for agricultural and general usage

Here, the authors investigated alternative methods of irrigating plants, motivated by their observation that current irrigation systems waste a large amount of fresh water. They compared three water delivery methods: a conventional sprinkler, an underground cloth, and a perforated pipe embedded in the soil. The cloth method saved the most water, although plants watered this way grew slightly less than plants watered with the sprinkler or pipe method.

Comparing the effects of electronic cigarette smoke and conventional cigarette smoke on lung cancer viability

Here, recognizing the significant growth in electronic cigarette use in recent years, the authors tested the hypothesis that three main components of the liquid solutions used in e-cigarettes might affect lung cancer cell viability. By exposing A549 human lung cancer cells to different types of smoke extracts, the authors found that increasing levels of nicotine improved lung cancer cell viability until nicotine toxicity caused cell death. They conclude that these results suggest that, contrary to conventional thought, e-cigarettes may be more dangerous than tobacco cigarettes in certain contexts.

Effects of Photoperiod Alterations on Stress Response in Daphnia magna

Here, seeking to better understand the effects of altered day-night cycles, the authors examined how an altered photoperiod affects Daphnia magna. By tracking possible stress responses, including mean heart rate, brood size, and male-to-female ratio, they found that a shorter photoperiod altered mean heart rate and brood size. Based on these observations, the authors suggest that it is important to consider how photoperiod alterations affect the stress responses of other organisms.

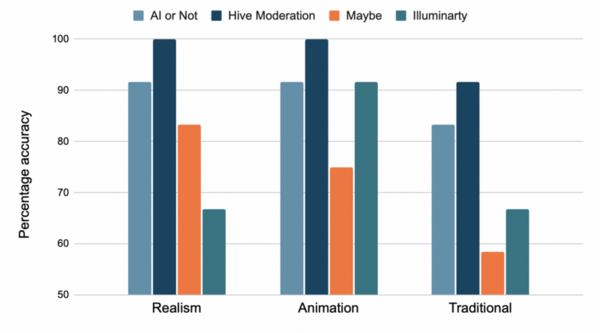

Thermoelectric Power Generation: Harnessing Solar Thermal Energy to Power an Air Conditioner

The authors test the feasibility of using thermoelectric modules both as a power source and as an air conditioner to reduce reliance on fossil fuels. At its peak, their battery generated 27% more power per unit area (in watts per square inch) than a solar panel, and the thermoelectric air conditioner operated despite an unsteady input voltage. The battery shows considerable potential, especially if its peak power output can be maintained.
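The 27% figure compares power normalized by area, not total power. Written out as a ratio (the symbols are our own notation, not the authors'), with $P$ the peak power output in watts and $A$ the device area in square inches:

$$
\frac{P_{\text{TEG}} / A_{\text{TEG}}}{P_{\text{PV}} / A_{\text{PV}}} = 1.27
$$

Because each power is divided by its device's area, this ratio alone does not imply that the thermoelectric battery produced more total power than the solar panel.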

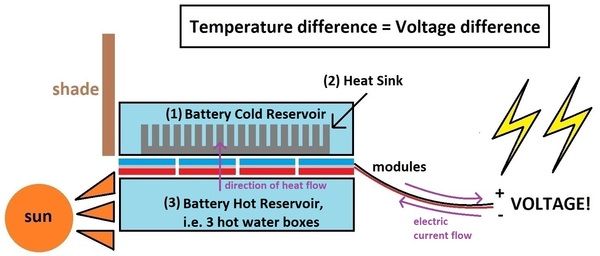

A Statistical Comparison of the Simultaneous Attack/Persistent Pursuit Theory Against Current Methods in Counterterrorism Using a Stochastic Model

Although current counterterrorism strategies are somewhat effective, the Simultaneous Attack/Persistent Pursuit (SAPP) Theory may be a superior alternative. The authors simulated five attack strategies (one SAPP and four non-SAPP) and concluded that the SAPP model was significantly more effective at reducing the final number of terrorist attacks. These results suggest a comparative advantage for the SAPP model, which may prove critical in future counterterrorism efforts.

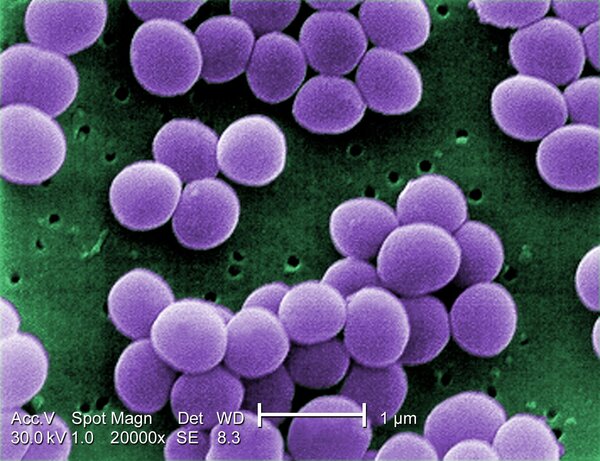

Improving Wound Healing by Breaking Down Biofilm Formation and Reducing Nosocomial Infections

During a 10-year period in the early 2000s, hospital-acquired (nosocomial) infections increased by 123%, and this number continues to rise. The purpose of this experiment was to combine hyaluronic acid, silver nanoparticles, and a bacteriophage cocktail into a hydrogel that promotes wound healing by increasing cell proliferation while simultaneously disrupting biofilm formation and breaking down Staphylococcus aureus and Pseudomonas aeruginosa. These two bacterial species contribute to nosocomial infections and are becoming increasingly resistant to antibiotics.
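A 123% increase is a relative change, so infections at the end of the period were roughly 2.23 times, not 1.23 times, the starting level. With $N_0$ and $N_1$ as illustrative labels for the infection counts at the start and end of the decade:

$$
\frac{N_1 - N_0}{N_0} = 1.23 \quad\Longrightarrow\quad N_1 = (1 + 1.23)\,N_0 = 2.23\,N_0
$$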

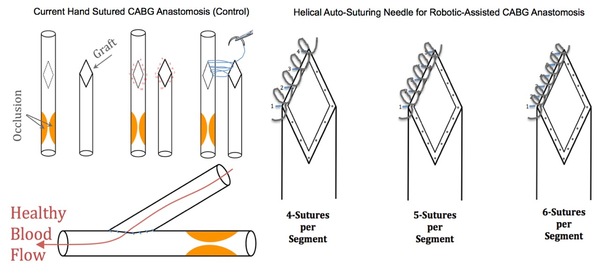

A Novel Method for Auto-Suturing in Laparoscopic Robotic-Assisted Coronary Artery Bypass Graft (CABG) Anastomosis

Levy & Levy tackle the optimization of the coronary artery bypass graft, a life-saving surgical technique that treats arterial blockages caused by coronary heart disease. The authors develop a novel auto-suturing method that saves time, allows more sutures to be placed, and improves graft quality compared with hand suturing. They also show that, with their new method, increasing the number of sutures from four to five significantly improves graft quality. These promising findings may help improve outcomes for patients undergoing surgery for coronary heart disease.

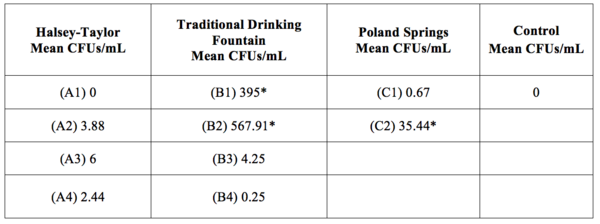

Bacterial Load Consistency Among Three Independent Water Distribution Systems

Clean drinking water is essential to maintaining public health. The authors of this study tested the bacterial load of water from three different disinfection and filtration systems to determine which system might be superior.

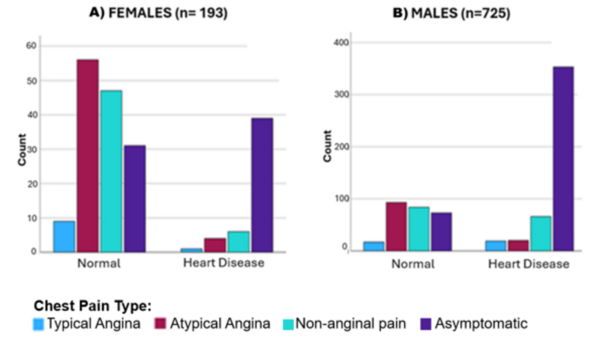

Predictive modeling of cardiovascular disease using exercise-based electrocardiography

The authors examined factors that could enable earlier diagnosis of cardiovascular disease and thereby improve patient outcomes. They found that advances in imaging and electrocardiography contribute to earlier detection of cardiovascular disease.
