Evaluating the performance of Q-learning-based AI in auctions

(1) Chadwick High School

https://doi.org/10.59720/25-073

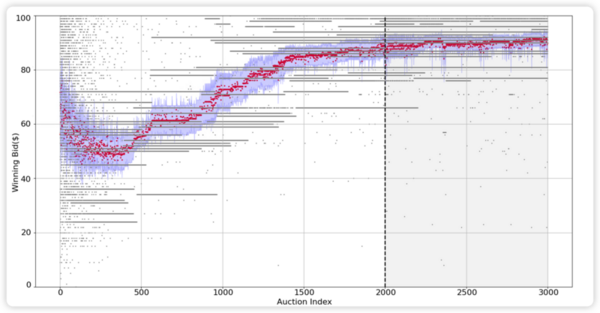

In modern advertising platforms such as Google Ads, advertisers set campaign goals and budgets, while algorithms driven by artificial intelligence (AI) handle the actual bidding to maximize the advertiser's benefit. This study investigates whether AI-driven bidding strategies developed through Q-learning align with classical auction theory. Q-learning is a reinforcement learning algorithm that learns by trial and error, updating its estimate of each action's value (its "quality," or Q-value) from the rewards it receives. In first-price auctions, where the winner pays their own (highest) bid, economic theory predicts that bidders should shade their bids, converging to 50% of their valuation in a two-bidder setting (the symmetric equilibrium when valuations are independent and uniformly distributed). In contrast, in second-price auctions, where the winner pays the second-highest bid, bidding 100% of one's valuation is the dominant strategy. We hypothesized that Q-learning bidders would learn to bid around 50% of their valuation in first-price auctions and their full valuation in second-price auctions, consistent with these theoretical predictions. Our results show that in first-price auctions, Q-learning bidders do not shade their bids as theory predicts, instead stabilizing at 94.9% of their valuation. In second-price auctions, Q-learning bidders exhibit near-truthful bidding, converging to 98.5% of their valuation. Our analysis suggests that Q-learning does not adapt its strategy to the auction type. These findings highlight the limitations of reinforcement learning in capturing strategic reasoning and suggest that current AI models struggle to develop auction-specific strategies without explicit guidance.
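The two theoretical benchmarks, and the kind of trial-and-error learner being compared against them, can be illustrated with a short simulation. This is a minimal sketch, not the authors' code: the bid grid `ACTIONS` (bids as fractions of one's own valuation), the rule that ties lose, the stateless Q-update (a single state with discount factor 0), and every hyperparameter are illustrative assumptions. It checks that, against a rival who bids half of a uniform [0, 1] valuation, the best first-price bid for a valuation of 0.8 is 0.4; that bidding one's valuation is never worse than any other grid bid in a second-price auction; and it trains two independent epsilon-greedy Q-learners.

```python
import random

# Hypothetical action grid: each bid is a fraction of the bidder's own valuation.
ACTIONS = [round(0.1 * i, 1) for i in range(1, 11)]  # 0.1, 0.2, ..., 1.0


def utility(auction: str, value: float, my_bid: float, other_bid: float) -> float:
    """Payoff in a two-bidder sealed-bid auction; ties lose (a simplifying assumption)."""
    if my_bid <= other_bid:
        return 0.0
    price = my_bid if auction == "first" else other_bid  # first-price: pay own bid
    return value - price


def expected_first_price_utility(value: float, bid: float) -> float:
    """Expected payoff of `bid` against a rival who bids half of a U[0, 1] valuation."""
    win_prob = min(2 * bid, 1.0)  # P(V / 2 < bid) for V ~ U[0, 1]
    return (value - bid) * win_prob


def best_first_price_bid(value: float, steps: int = 100) -> float:
    """Grid-search the bid in [0, value] that maximizes expected first-price payoff."""
    bids = [value * i / steps for i in range(steps + 1)]
    return max(bids, key=lambda b: expected_first_price_utility(value, b))


def truthful_is_dominant(value: float, steps: int = 100) -> bool:
    """Check on a grid that bidding `value` is never worse in a second-price auction."""
    grid = [i / steps for i in range(steps + 1)]
    return all(
        utility("second", value, value, rival) >= utility("second", value, b, rival)
        for rival in grid
        for b in grid
    )


def train(auction: str, rounds: int = 50_000, alpha: float = 0.05,
          epsilon: float = 0.1, seed: int = 0) -> list[float]:
    """Two independent epsilon-greedy Q-learners; returns each one's greedy bid fraction."""
    rng = random.Random(seed)
    q = [[0.0] * len(ACTIONS) for _ in range(2)]  # one Q-table per bidder
    for _ in range(rounds):
        values = [rng.random(), rng.random()]  # private valuations ~ U[0, 1]
        acts = []
        for i in range(2):
            if rng.random() < epsilon:  # explore: random bid fraction
                acts.append(rng.randrange(len(ACTIONS)))
            else:  # exploit: current best-valued bid fraction
                acts.append(max(range(len(ACTIONS)), key=lambda a: q[i][a]))
        bids = [ACTIONS[acts[i]] * values[i] for i in range(2)]
        for i in range(2):
            reward = utility(auction, values[i], bids[i], bids[1 - i])
            # Q-learning update with a single state and discount factor 0:
            # Q(a) <- Q(a) + alpha * (reward - Q(a))
            q[i][acts[i]] += alpha * (reward - q[i][acts[i]])
    return [ACTIONS[max(range(len(ACTIONS)), key=lambda a: q[i][a])] for i in range(2)]


if __name__ == "__main__":
    print(best_first_price_bid(0.8))   # theory: half of the valuation
    print(truthful_is_dominant(0.7))   # theory: truthful bidding is dominant
    print(train("first"), train("second"))
```

`train("first")` and `train("second")` report the bid fraction each simulated agent ends up preferring. Those numbers depend on the seed, the grid, and the hyperparameters chosen here, so they illustrate the mechanism only and are not a reproduction of the 94.9% and 98.5% figures reported in the study.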
