Stock price prediction: Long short-term memory vs. Autoformer and time series foundation model

(1) Aragon High School, (2) University of Chicago

https://doi.org/10.59720/24-228

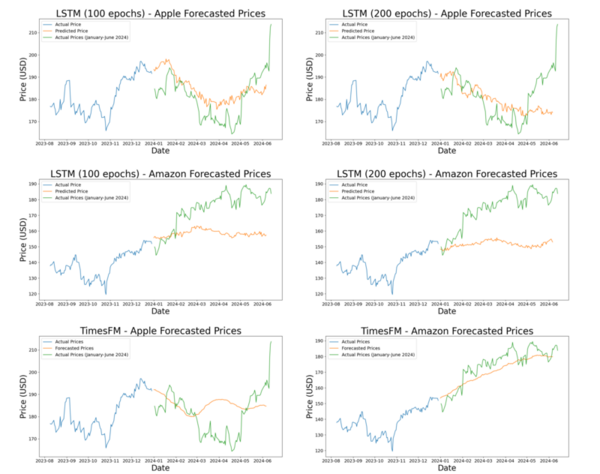

Predicting future closing prices is important for investors because it can help them decide whether to invest in a stock and estimate the total profits they might earn. We hypothesized that a long short-term memory (LSTM) model's architecture, coupled with its exclusive training on historical stock data, would produce better predictions of a stock's future closing prices than newer models that apply transfer learning. To test this, we compared the accuracy of an LSTM against two influential transfer learning models for stock price prediction: the Autoformer and the time series foundation model (TimesFM). Each model received historical stock data for Apple and Amazon and used it to predict closing prices over a three-month period. After calculating the mean absolute error (MAE) for each model, we found that the LSTM performed best when predicting Apple stock prices, whereas TimesFM performed better when forecasting Amazon stock prices. The Autoformer was the least accurate, possibly because it was trained on traffic data before stock data. By comparing the performance of these models, we provide insights for future research on stock price prediction models and show that domain-specific training can outperform transfer learning for predicting stock prices in certain instances.
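The models are ranked by mean absolute error, the average of the absolute differences between actual and predicted closing prices over the forecast window. The following minimal Python sketch shows that metric and the ranking step. The function name `mean_absolute_error`, the model labels, and the prices are hypothetical illustrations, not the study's code or data.

```python
def mean_absolute_error(actual, predicted):
    """Average of |actual - predicted| over all forecast days."""
    if not actual or len(actual) != len(predicted):
        raise ValueError("need two non-empty sequences of equal length")
    return sum(abs(a - p) for a, p in zip(actual, predicted)) / len(actual)


# Hypothetical closing prices for a short window (illustrative only).
actual = [100.0, 102.0, 101.0, 105.0]

# Hypothetical forecasts from two unnamed models.
forecasts = {
    "model_a": [101.0, 101.0, 103.0, 104.0],
    "model_b": [99.0, 104.0, 100.0, 108.0],
}

# Score every model; a lower MAE means a more accurate forecast.
scores = {name: mean_absolute_error(actual, pred) for name, pred in forecasts.items()}
best = min(scores, key=scores.get)
print(scores, "best:", best)
```

Because MAE is expressed in the same units as the prices (for example, dollars), it is easy to interpret: an MAE of 1.25 means the forecasts were off by $1.25 per day on average.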
