Impact of length of audio on music classification with deep learning

(1) Notre Dame High School, (2) AIClube Research Institute

https://doi.org/10.59720/24-214

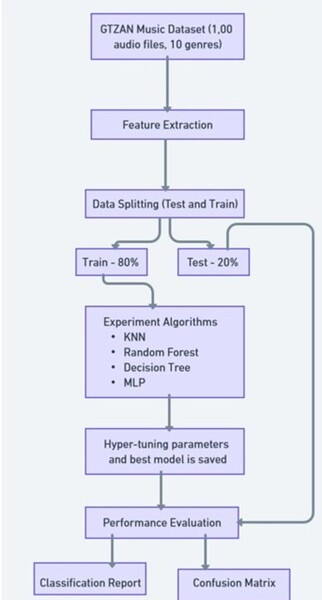

Music genre classification is a challenging task in music information retrieval. Genre plays a crucial role in music recommendation systems because it influences the quality of track suggestions, so an automated way to classify genres would help build a high-quality recommendation system. In this study, we proposed an approach to such automation using machine learning models. The dataset consisted of 1,000 samples across 10 genre categories. Our approach used digital signal processing to extract features from music clips, which were then used for genre classification with machine learning techniques. We hypothesized that 30-second audio clips would yield higher genre classification accuracy than 3-second audio clips. We tested this hypothesis by analyzing clips of both lengths. For each cohort, we divided the dataset into disjoint training and test sets to evaluate model performance. The highest accuracy on the 30-second clips was 97%, achieved by both K-Nearest Neighbors (KNN) and Random Forest, while the highest accuracy on the 3-second clips was 92%, achieved by KNN.
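The pipeline described above (signal-processing features, a disjoint train/test split, and a KNN classifier evaluated at two clip lengths) can be illustrated with a minimal sketch. Everything in it is an assumption made for illustration rather than the study's actual method: the two synthetic "genres" are noisy sine tones, the features are zero-crossing rate and RMS energy, and the names `make_clip`, `extract_features`, `knn_predict`, and `evaluate` are hypothetical. It does not load the study's dataset and will not reproduce the reported accuracies.

```python
import math
import random
from collections import Counter


def zero_crossing_rate(signal):
    """Fraction of adjacent sample pairs whose signs differ."""
    crossings = sum(1 for a, b in zip(signal, signal[1:]) if (a >= 0) != (b >= 0))
    return crossings / (len(signal) - 1)


def rms_energy(signal):
    """Root-mean-square amplitude of the signal."""
    return math.sqrt(sum(x * x for x in signal) / len(signal))


def extract_features(signal):
    """Illustrative two-dimensional feature vector (an assumption, not the study's features)."""
    return (zero_crossing_rate(signal), rms_energy(signal))


def make_clip(freq_hz, amplitude, seconds, rate, rng):
    """Synthetic stand-in for a music clip: a sine tone plus Gaussian noise."""
    n = int(seconds * rate)
    return [
        amplitude * math.sin(2 * math.pi * freq_hz * t / rate) + rng.gauss(0, 0.05)
        for t in range(n)
    ]


def knn_predict(train, query, k=3):
    """Majority vote among the k training points closest to the query."""
    nearest = sorted(train, key=lambda item: math.dist(item[0], query))[:k]
    return Counter(label for _, label in nearest).most_common(1)[0][0]


def evaluate(seconds, rate=1000, clips_per_genre=10, seed=0):
    """Build a synthetic dataset at one clip length and return test accuracy."""
    rng = random.Random(seed)
    # Hypothetical "genres": (base frequency in Hz, amplitude).
    genres = {"low": (110, 0.5), "high": (300, 0.9)}
    data = []
    for label, (freq, amp) in genres.items():
        for _ in range(clips_per_genre):
            clip = make_clip(freq * rng.uniform(0.9, 1.1), amp, seconds, rate, rng)
            data.append((extract_features(clip), label))
    # Disjoint train/test split: 80% of clips for training, 20% for testing.
    rng.shuffle(data)
    split = int(0.8 * len(data))
    train, test = data[:split], data[split:]
    correct = sum(knn_predict(train, features) == label for features, label in test)
    return correct / len(test)


if __name__ == "__main__":
    for seconds in (3, 30):
        print(f"{seconds}-second clips: accuracy = {evaluate(seconds):.2f}")
```

Running the same `evaluate` function at both lengths keeps everything except clip duration fixed, which mirrors the comparison the hypothesis calls for. On real audio, the synthetic generator would be replaced by features extracted from the actual clips.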
