Mitigating open-set misclassification in a colorectal cancer detecting neural network

(1) Allderdice High School, (2) International Computer Science Institute

https://doi.org/10.59720/23-345

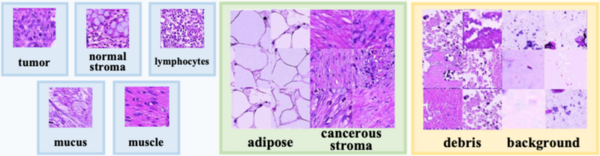

Neural networks typically operate under a closed-world assumption: they are designed to classify only the objects they were trained on, known as in-distribution (ID) objects. When deployed in the real world, however, networks inevitably encounter objects they were not trained on, known as out-of-distribution (OOD) objects. In these situations, the closed-world assumption leaves networks ill-prepared, and OOD objects are misclassified as ID classes. Neural networks are especially prone to misclassifying open-set objects, a subset of OOD objects that come from the same dataset as the ID objects and are therefore very similar to them in appearance. In safety-critical applications such as cancer diagnosis, open-set objects are very common, and misclassifying them can have dire consequences. In this study, we aimed to design a method to mitigate the misclassification of open-set objects. We hypothesized that exposing networks to examples of open-set objects during training, via an auxiliary training class, would substantially reduce the rate at which open-set objects were misclassified. Our Intra-Dataset Outlier Exposure (IDOE) method outperformed all but one state-of-the-art method in reducing open-set misclassification when evaluated on the MNIST and CIFAR-10 datasets. Additionally, IDOE achieved a test Area Under the Receiver Operating Characteristic curve (AUROC) score of 94.8% on the PathMNIST dataset of healthy and cancerous colorectal tissues, demonstrating high efficacy in a medical diagnosis application. Future work should test IDOE on more diverse, real-world datasets, such as those used in autonomous vehicles, to better evaluate its applicability in safety-critical environments where misclassifying unlabeled open-set objects is costly.
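To make the auxiliary-class idea concrete, the following is a minimal sketch, not the authors' implementation. It assumes that some classes of a dataset are treated as ID, that a few other classes from the same dataset are exposed during training under a single extra label, and that any remaining classes are held out as unseen open-set objects for evaluation. The function names `build_idoe_labels` and `auroc`, and the particular class split, are hypothetical choices made for illustration.

```python
from typing import List, Sequence, Tuple


def build_idoe_labels(
    labels: Sequence[int],
    id_classes: Sequence[int],
    exposed_classes: Sequence[int],
) -> Tuple[List[int], List[int]]:
    """Remap labels for training with one auxiliary outlier class.

    ID classes are mapped to 0..K-1 in the order given. Every exposed
    open-set class is mapped to the single auxiliary index K. Samples of
    any other class are dropped from the training set (assumption: they
    are reserved as unseen open-set objects for evaluation).
    Returns (kept_positions, new_labels).
    """
    class_to_index = {c: i for i, c in enumerate(id_classes)}
    exposed = set(exposed_classes)
    aux_index = len(id_classes)

    kept_positions: List[int] = []
    new_labels: List[int] = []
    for position, label in enumerate(labels):
        if label in class_to_index:
            kept_positions.append(position)
            new_labels.append(class_to_index[label])
        elif label in exposed:
            kept_positions.append(position)
            new_labels.append(aux_index)
    return kept_positions, new_labels


def auroc(scores_positive: Sequence[float], scores_negative: Sequence[float]) -> float:
    """AUROC as the probability that a random positive sample scores
    higher than a random negative sample, with ties counted as half."""
    wins = 0.0
    for p in scores_positive:
        for n in scores_negative:
            if p > n:
                wins += 1.0
            elif p == n:
                wins += 0.5
    return wins / (len(scores_positive) * len(scores_negative))


if __name__ == "__main__":
    # Hypothetical split: classes 0-2 are ID, classes 7-8 are exposed outliers.
    kept, remapped = build_idoe_labels([0, 1, 2, 7, 8, 9, 3], [0, 1, 2], [7, 8])
    print(kept, remapped)
    # Hypothetical scores: higher means "more likely an ID object".
    print(auroc([0.9, 0.8, 0.4], [0.7, 0.3]))
```

A classifier trained on the remapped labels would have K + 1 outputs, and a prediction of the auxiliary index could be read as "not one of the known classes". The pairwise AUROC above is quadratic in the number of samples and is meant only to show what the metric measures; a library routine would be preferable on real data.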
