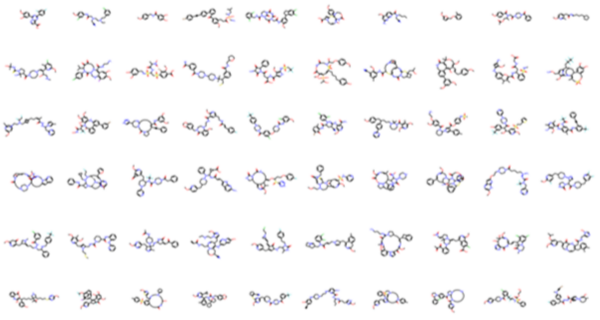

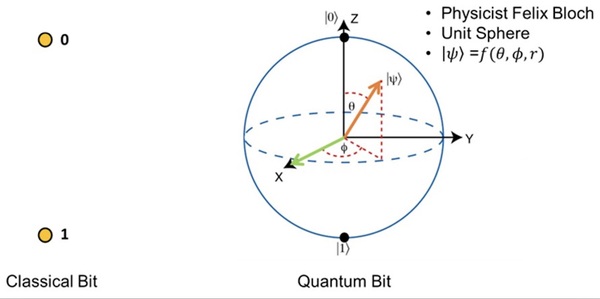

Current drug discovery processes can cost billions of dollars and usually take five to ten years. Researchers have been developing computational approaches to search chemical space, which can be on the order of 10⁶⁰ molecules, for promising compounds. One approach uses deep generative models: artificial intelligence models that learn the probability distribution of chemical structures, including nonlinear patterns in the data, and generate new data points that follow the trends they identify. Aiming for faster runtime and greater robustness when analyzing high-dimensional data, we designed and implemented a Hybrid Quantum-Classical Generative Adversarial Network (QGAN) to synthesize molecules.
