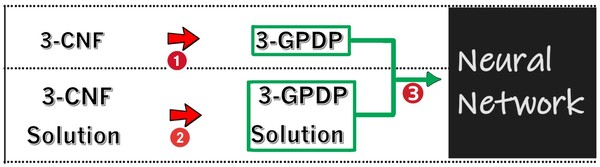

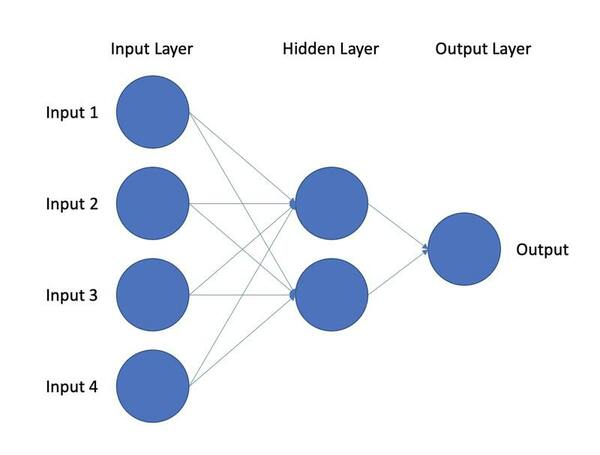

Solving a new NP-Complete problem that resembles image pattern recognition using deep learning

In this study, the authors tested the ability and accuracy of a neural net to identify patterns in complex number matrices.

The most efficient position of magnets

Here, the authors investigated how to position magnets on the surface of a refrigerator so that they hold the most pieces of paper. Using a regression model along with an artificial neural network, they found that the most efficient arrangement of four magnets places them at the vertices of a rectangle.

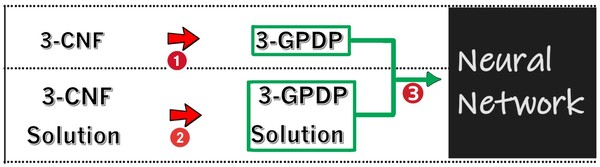

Collaboration beats heterogeneity: Improving federated learning-based waste classification

Building on the success of deep learning, recent works have attempted to develop waste classification models using deep neural networks. This work proposes federated learning (FL) as a solution, as it allows participants to help train the model using their own data. Results showed that with fewer clients, a higher participation ratio reduced the accuracy degradation caused by data heterogeneity.
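The summary does not say how client updates are combined. As a rough illustration only, the sketch below assumes the widely used Federated Averaging (FedAvg) rule on a toy one-weight linear model; the function names, learning rate, and toy data are hypothetical and are not taken from the study. The `participation` argument stands in for the participation ratio, i.e. the fraction of clients sampled in each round.

```python
import random
from typing import List, Tuple

Dataset = List[Tuple[float, float]]  # (x, y) pairs held privately by one client


def local_update(w: float, data: Dataset, lr: float = 0.01, epochs: int = 5) -> float:
    """Run a few epochs of gradient descent on one client's data for y ~ w * x."""
    for _ in range(epochs):
        grad = sum(2 * (w * x - y) * x for x, y in data) / len(data)
        w -= lr * grad
    return w


def fedavg_round(w_global: float, clients: List[Dataset], participation: float,
                 rng: random.Random) -> float:
    """One assumed FedAvg round: sample clients, train locally, average by data size."""
    k = max(1, round(participation * len(clients)))
    chosen = rng.sample(clients, k)
    total = sum(len(d) for d in chosen)
    # Only updated weights are sent to the server; raw (x, y) pairs stay on the client.
    return sum(local_update(w_global, d) * len(d) / total for d in chosen)


# Toy demo: four clients whose inputs cover different ranges (a simple form of heterogeneity).
rng = random.Random(0)
clients = [[(x, 3.0 * x) for x in [0.5 * i + 0.1 * j for j in range(1, 6)]] for i in range(1, 5)]
w = 0.0
for _ in range(100):
    w = fedavg_round(w, clients, participation=0.5, rng=rng)
print(f"global weight after 100 rounds: {w:.3f}")  # approaches the true slope 3.0
```

Lowering `participation` means each round averages fewer clients; with heterogeneous data those few may be less representative of the whole population, which is one intuition for why the participation ratio matters.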

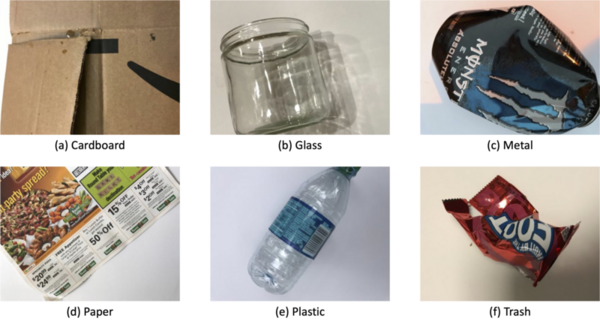

Uncovering mirror neurons’ molecular identity by single cell transcriptomics and microarray analysis

In this study, the authors use bioinformatic approaches to characterize mirror neurons, which are active both when performing certain actions and when seeing them performed. They also investigated whether mirror neuron impairment is connected to neurodegenerative diseases and psychiatric disorders.

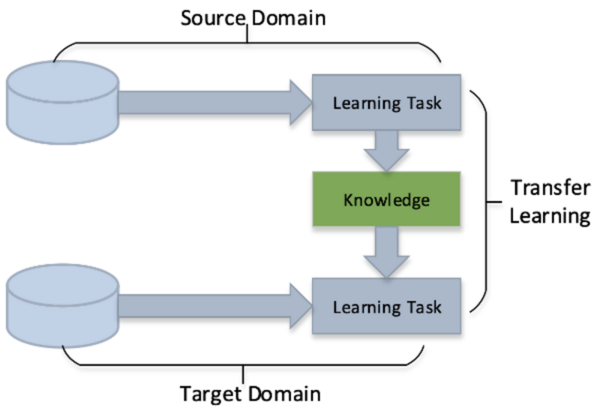

Transfer learning and data augmentation in osteosarcoma cancer detection

Osteosarcoma is a type of bone cancer that affects children and young adults. Early diagnosis of osteosarcoma is crucial to successful treatment. The current diagnostic methods, which include imaging tests and biopsy, are time-consuming and prone to human error. Hence, we used deep learning to extract patterns and detect osteosarcoma from histological images. We hypothesized that combining two techniques, transfer learning and data augmentation, would improve the efficacy of osteosarcoma detection in histological images. The dataset used for the study consisted of histological images of osteosarcoma and was highly imbalanced, containing very few images with tumors. Since transfer learning reuses knowledge from previously trained models for classification and detection, we hypothesized that it would perform well on such an imbalanced dataset. To further improve learning, we used data augmentation to introduce variations into the dataset. We also evaluated the efficacy of different convolutional neural network models on this task. We obtained an accuracy of 91.18% using MobileNetV2 as the base transfer learning model with various geometric transformations, outperforming the state-of-the-art convolutional neural network-based approach.
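The summary says only that "various geometric transformations" were applied. A minimal sketch, assuming flips and 90-degree rotations on a grayscale image stored as nested lists; a real pipeline would apply such transforms to image tensors with a framework's preprocessing utilities before feeding a pretrained base such as MobileNetV2. All function names here are hypothetical.

```python
from typing import Callable, List

Image = List[List[int]]  # grayscale image stored as a list of pixel rows


def hflip(img: Image) -> Image:
    """Mirror the image left to right."""
    return [row[::-1] for row in img]


def vflip(img: Image) -> Image:
    """Mirror the image top to bottom."""
    return img[::-1]


def rot90(img: Image) -> Image:
    """Rotate the image 90 degrees clockwise (works for non-square images too)."""
    return [list(row) for row in zip(*img[::-1])]


def augment(img: Image) -> List[Image]:
    """Return the original image plus four geometric variants."""
    transforms: List[Callable[[Image], Image]] = [
        hflip,
        vflip,
        rot90,
        lambda im: rot90(rot90(im)),  # 180-degree rotation
    ]
    return [img] + [t(img) for t in transforms]


# Hypothetical tiny "image" standing in for a rare tumor patch.
tumor_images = [[[0, 1], [2, 3]]]
augmented = [variant for im in tumor_images for variant in augment(im)]
print(len(augmented))  # 5 variants per original image
```

One way to use this on an imbalanced dataset is to augment only the minority (tumor) class, which increases its share of the training data without collecting new slides; whether the study did this is not stated.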

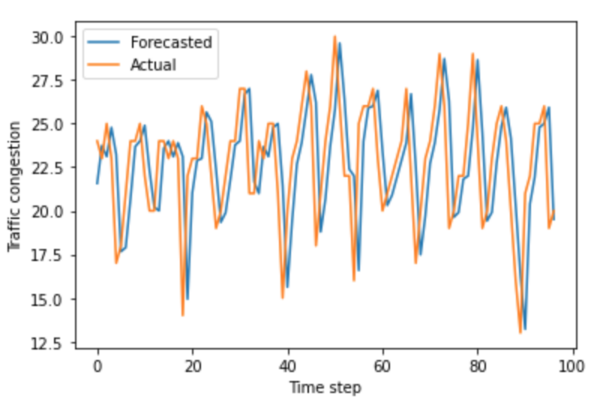

Differential privacy in machine learning for traffic forecasting

In this paper, we measured the privacy budgets and utilities of different differentially private mechanisms combined with different machine learning models that forecast traffic congestion at future timestamps. We expected artificial neural networks (ANNs) combined with the Staircase mechanism to perform best across the entire privacy budget range, especially at medium-to-high privacy budgets. In this study, we used Autoregressive Integrated Moving Average (ARIMA) and neural network models to forecast, and added differentially private Laplacian, Gaussian, and Staircase noise to our datasets. We tested two real traffic congestion datasets, experimented with the different models, and examined their utility across privacy budgets. We found that neural networks combined with the Staircase mechanism were a favorable combination for this application. Our findings identify the best-performing models for challenging time series forecasting and could be applied to non-traffic applications such as disease tracking and population growth.
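For readers unfamiliar with the three mechanisms, the sketch below shows how each adds noise to a single numeric value, given a query sensitivity `sensitivity` and privacy budget `epsilon`. It is an illustration under stated assumptions, not the study's code: the Gaussian calibration is the classic bound that holds only for 0 < epsilon < 1, the Staircase sampler uses the standard geometric-step construction with `gamma = 1 / (1 + exp(epsilon / 2))` as an assumed default, and all function names are hypothetical. Smaller `epsilon` means more noise and stronger privacy.

```python
import math
import random
from typing import Optional


def laplace_mech(value: float, sensitivity: float, epsilon: float, rng: random.Random) -> float:
    """epsilon-DP: add Laplace(0, sensitivity / epsilon) noise."""
    scale = sensitivity / epsilon
    # The difference of two i.i.d. exponentials with mean `scale` is Laplace(0, scale).
    return value + rng.expovariate(1 / scale) - rng.expovariate(1 / scale)


def gaussian_mech(value: float, sensitivity: float, epsilon: float, delta: float,
                  rng: random.Random) -> float:
    """(epsilon, delta)-DP with the classic calibration, valid only for 0 < epsilon < 1."""
    if not 0 < epsilon < 1:
        raise ValueError("classic Gaussian calibration requires 0 < epsilon < 1")
    sigma = sensitivity * math.sqrt(2 * math.log(1.25 / delta)) / epsilon
    return value + rng.gauss(0.0, sigma)


def staircase_mech(value: float, sensitivity: float, epsilon: float, rng: random.Random,
                   gamma: Optional[float] = None) -> float:
    """epsilon-DP Staircase noise: a geometric step index plus a position inside that step."""
    if gamma is None:
        gamma = 1 / (1 + math.exp(epsilon / 2))  # assumed default; gamma must lie in [0, 1]
    b = math.exp(-epsilon)
    sign = 1 if rng.random() < 0.5 else -1
    # Step index G with P(G = i) = (1 - b) * b**i, via inverse-CDF sampling.
    g = math.floor(math.log(1 - rng.random()) / math.log(b))
    u = rng.random()
    # Each step has a part of width gamma and a part of width (1 - gamma)
    # whose density is lower by a factor of b; choose the part by its probability mass.
    if rng.random() < gamma / (gamma + (1 - gamma) * b):
        magnitude = (g + gamma * u) * sensitivity
    else:
        magnitude = (g + gamma + (1 - gamma) * u) * sensitivity
    return value + sign * magnitude


if __name__ == "__main__":
    rng = random.Random(42)
    readings = [42.0, 38.5, 51.0]  # hypothetical traffic congestion readings
    for eps in (0.5, 2.0):
        print(eps, [round(staircase_mech(r, 1.0, eps, rng), 1) for r in readings])
```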

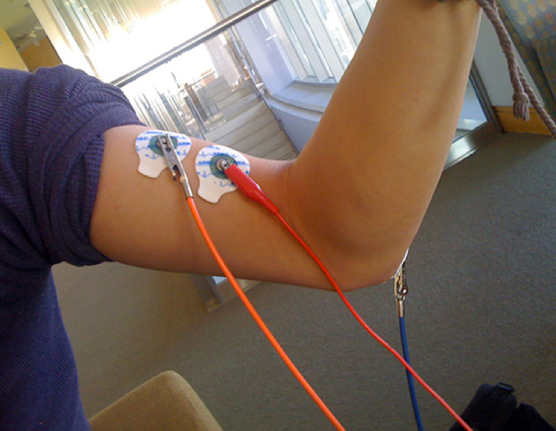

Starts and Stops of Rhythmic and Discrete Movements: Modulation in the Excitability of the Corticomotor Tract During Transition to a Different Type of Movement

Control of voluntary and involuntary movements is one of the most important aspects of human neurological function, but the mechanisms of motor control are not completely understood. In this study, the authors use transcranial magnetic stimulation (TMS) to stimulate a portion of the motor cortex while subjects performed either discrete (e.g., throwing) or rhythmic (e.g., walking) movements. By recording electrical activity in the muscles during this process, the authors showed that motor evoked potentials (MEPs) measured in the muscles during TMS are larger in amplitude for discrete movements than for rhythmic movements. Interestingly, they also found that MEPs during transitions between rhythmic and discrete movements were nearly identical and larger in amplitude than those recorded during either rhythmic or discrete movements alone. This research provides important insights into the neurological control of movement and will serve as a foundation for future studies of temporal variability in neural activity during different movement types.

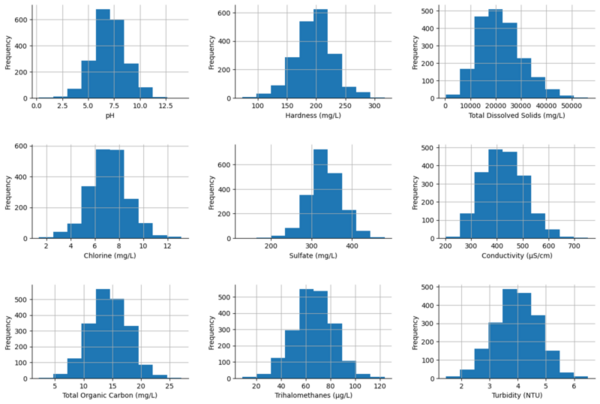

Comparative study of machine learning models for water potability prediction

The global issue of water quality has led to the use of machine learning models, such as artificial neural networks (ANNs) and support vector machines (SVMs), to predict water potability. However, these models can be complex and resource-intensive. This research aimed to find a simpler, more efficient model for water quality prediction.

Model selection and optimization for poverty prediction on household data from Cambodia

Here, the authors used three machine learning models to predict poverty levels in Cambodia from available household data. They found that a multilayer perceptron outperformed the other models, with an accuracy of 87%. They suggest that data-driven approaches such as these could be used to target and alleviate poverty more effectively.

Post-Traumatic Stress Disorder (PTSD) biomarker identification using a deep learning model

In this study, the authors use a deep learning model to classify patients with post-traumatic stress disorder based on novel markers, with the goal of identifying candidate biomarkers for the disorder.
