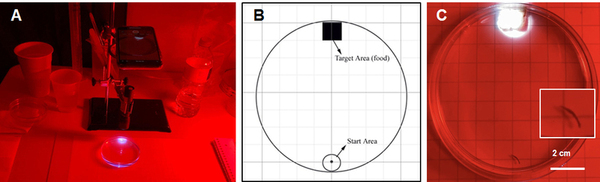

Stress-induced genetic memory inheritance and retention in Planarian biological model

This study explored whether planaria, known for their regenerative abilities, can retain learned memories after regeneration and how stressors like alcohol affect memory.

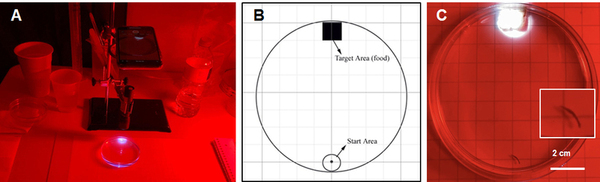

Gradient boosting with temporal feature extraction for modeling keystroke log data

Although there has been great progress in the field of natural language processing (NLP) over the last few years, particularly with the development of attention-based models, less research has focused on modeling keystroke log data. State-of-the-art methods handle textual data directly, and while this has produced excellent results, their time complexity and resource usage are quite high. Additionally, these methods do not incorporate the actual writing process when assessing text and instead focus solely on the content. We therefore proposed a framework for modeling textual data using keystroke-based features. Such features capture how a document or response was written rather than the final text that was produced. They are vastly different from features extracted from raw text but reveal information that is otherwise hidden. Transformer-based methods, which use attention to weight the different components of a text sequence, currently dominate NLP because of the strong understanding of natural language they display. We hypothesized that pairing efficient machine learning techniques with keystroke log information would produce results comparable to those of transformer models in far less time. We showed that models trained on keystroke log data can effectively evaluate the quality of writing, and do so in significantly less time than transformer-based methods. This is significant because it provides a fast, inexpensive alternative to increasingly large and slow LLMs.
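
As a minimal sketch of the kind of temporal feature extraction described above, the Python function below turns a keystroke log into a fixed-length set of features. The log format (a time-sorted list of `(timestamp_ms, key)` tuples), the specific features, and the 2-second pause threshold are illustrative assumptions, not the authors' actual feature set.

```python
from statistics import mean, median

# Hypothetical cutoff for counting an inter-key gap as a "long pause".
PAUSE_THRESHOLD_MS = 2000


def temporal_features(events):
    """Summarize a keystroke log given as time-sorted (timestamp_ms, key) tuples.

    The log format and feature choices are assumptions for illustration.
    """
    if len(events) < 2:
        raise ValueError("need at least two keystrokes")
    times = [t for t, _ in events]
    # Inter-keystroke intervals between consecutive events.
    gaps = [b - a for a, b in zip(times, times[1:])]
    total_ms = times[-1] - times[0]
    n_backspace = sum(1 for _, key in events if key == "Backspace")
    return {
        "total_time_s": total_ms / 1000,
        "mean_gap_ms": mean(gaps),
        "median_gap_ms": median(gaps),
        "long_pauses": sum(1 for g in gaps if g >= PAUSE_THRESHOLD_MS),
        "backspace_ratio": n_backspace / len(events),
        "keys_per_min": len(events) / (total_ms / 60000) if total_ms else 0.0,
    }


# Hypothetical log: a short burst, one long pause, then a correction.
events = [(0, "T"), (150, "h"), (300, "e"), (2600, " "), (2750, "c"), (2900, "Backspace")]
features = temporal_features(events)
# features["mean_gap_ms"] == 580, features["long_pauses"] == 1
```

Feature dictionaries like this, one per essay, could then be fed to a gradient-boosting model such as scikit-learn's `GradientBoostingRegressor`; which model and hyperparameters the authors actually used is not described here.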

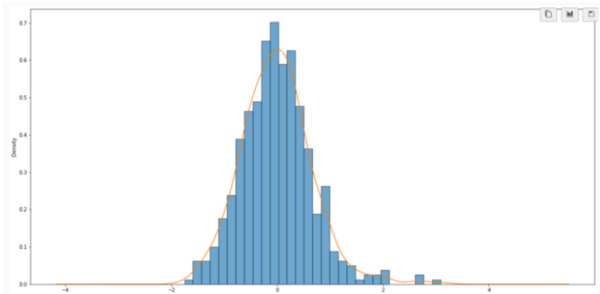

Machine learning for retinopathy prediction: Unveiling the importance of age and HbA1c with XGBoost

The purpose of our study was to examine the correlation of glycosylated hemoglobin (HbA1c), blood pressure (BP) readings, and lipid levels with retinopathy. Our main hypothesis was that poor glycemic control, as evidenced by high HbA1c levels, along with high blood pressure and abnormal lipid levels, increases the risk of retinopathy. Using an XGBoost model, we identified age and HbA1c as the two features most important to the model's predictions. This suggests that older patients with poor glycemic control are more likely to show signs of retinopathy.
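
The summary reports which features mattered most to the model. XGBoost provides its own built-in importance scores; as a model-agnostic stand-in (a swapped-in technique, not the authors' method), the sketch below computes permutation importance in plain Python, measuring how much the prediction error grows when one feature column is shuffled. The toy risk function, the feature columns, and the patient values are all hypothetical.

```python
import random


def permutation_importance(predict, X, y, n_repeats=10, seed=0):
    """Mean increase in mean squared error when each feature column is shuffled."""
    rng = random.Random(seed)

    def mse(rows):
        return sum((predict(r) - t) ** 2 for r, t in zip(rows, y)) / len(y)

    baseline = mse(X)
    scores = []
    for j in range(len(X[0])):
        increase = 0.0
        for _ in range(n_repeats):
            col = [row[j] for row in X]
            rng.shuffle(col)
            # Replace column j with its shuffled values; other columns stay intact.
            permuted = [row[:j] + [v] + row[j + 1:] for row, v in zip(X, col)]
            increase += mse(permuted) - baseline
        scores.append(increase / n_repeats)
    return scores


# Hypothetical data: columns are [age, HbA1c, unrelated noise].
X = [[45, 6.1, 3], [60, 8.4, 1], [52, 7.0, 9], [71, 9.2, 4], [38, 5.6, 7], [66, 7.8, 2]]


def risk(row):
    # Hypothetical "trained model" that never reads the noise column.
    return 0.02 * row[0] + 0.3 * row[1]


y = [risk(row) for row in X]
scores = permutation_importance(risk, X, y)
# The noise column scores 0.0 because shuffling it never changes a prediction.
```

Permutation importance is used here only because it needs no external library; XGBoost's gain- or weight-based importances can rank features differently.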

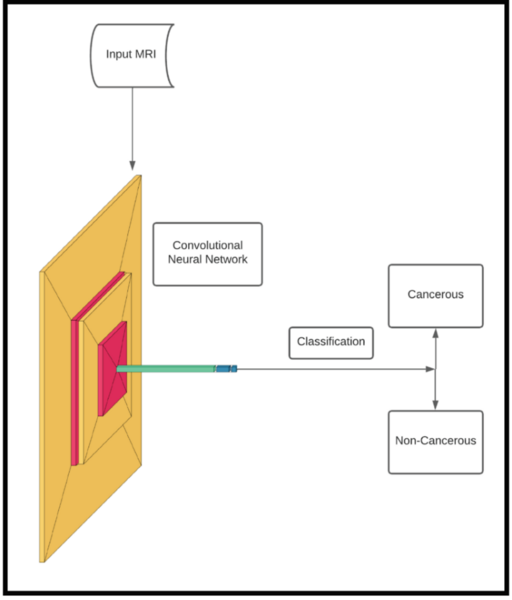

Predicting the Instance of Breast Cancer within Patients using a Convolutional Neural Network

Using a convolutional neural network, these authors show that machine learning can diagnose breast cancer with high accuracy.

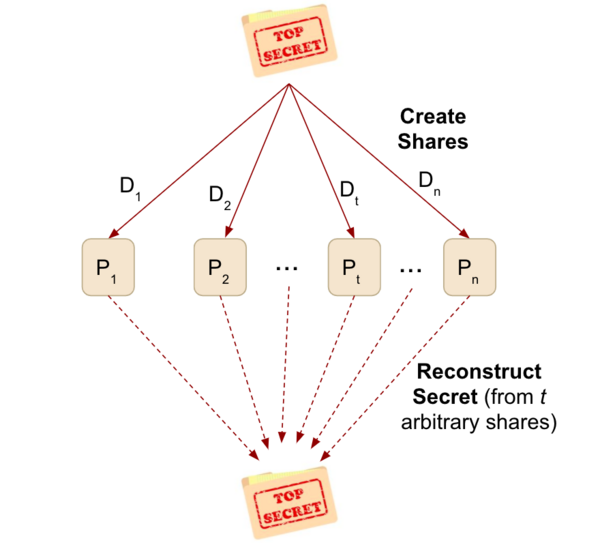

LawCrypt: Secret Sharing for Attorney-Client Data in a Multi-Provider Cloud Architecture

In this study, the authors develop an architecture for cloud-based databases used by law firms that ensures the confidentiality, availability, and integrity of attorney documents while being more efficient than traditional encryption algorithms. They assessed whether the architecture met their engineering criteria and tested the range of file sizes it could process. The authors found that their system handled larger file sizes and met the engineering criteria. This study presents a valuable new tool that can help law firms maintain adequate security as they shift to cloud-based storage for their files.
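
The summary does not say which secret-sharing scheme LawCrypt uses. As an illustration of the general idea, the sketch below implements Shamir's (k, n) threshold secret sharing in Python: a secret is split into n shares (for example, one per cloud provider), any k of which reconstruct it, while fewer than k reveal nothing about it. The prime modulus, the parameters, and the example key are assumptions for illustration.

```python
import secrets

# Mersenne prime 2**127 - 1; every secret must be smaller than this (assumed choice).
PRIME = 2**127 - 1


def split_secret(secret: int, n: int, k: int) -> list[tuple[int, int]]:
    """Split `secret` into n shares so that any k of them recover it (Shamir)."""
    if not 0 <= secret < PRIME:
        raise ValueError("secret must be in [0, PRIME)")
    if not 1 <= k <= n:
        raise ValueError("need 1 <= k <= n")
    # Random polynomial of degree k - 1 whose constant term is the secret.
    coeffs = [secret] + [secrets.randbelow(PRIME) for _ in range(k - 1)]
    shares = []
    for x in range(1, n + 1):
        # Evaluate the polynomial at x with Horner's rule, mod PRIME.
        y = 0
        for c in reversed(coeffs):
            y = (y * x + c) % PRIME
        shares.append((x, y))
    return shares


def recover_secret(shares: list[tuple[int, int]]) -> int:
    """Recover the secret by Lagrange interpolation at x = 0 over GF(PRIME)."""
    secret = 0
    for i, (xi, yi) in enumerate(shares):
        num, den = 1, 1
        for j, (xj, _) in enumerate(shares):
            if i != j:
                num = (num * -xj) % PRIME
                den = (den * (xi - xj)) % PRIME
        secret = (secret + yi * num * pow(den, -1, PRIME)) % PRIME
    return secret


# Hypothetical 15-byte document key, shared across five providers, threshold three.
key = int.from_bytes(b"client-key-0001", "big")
shares = split_secret(key, n=5, k=3)
```

This sketch handles secrets of up to 15 bytes (below the 127-bit modulus); larger inputs such as whole documents would have to be split into chunks, each shared separately.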

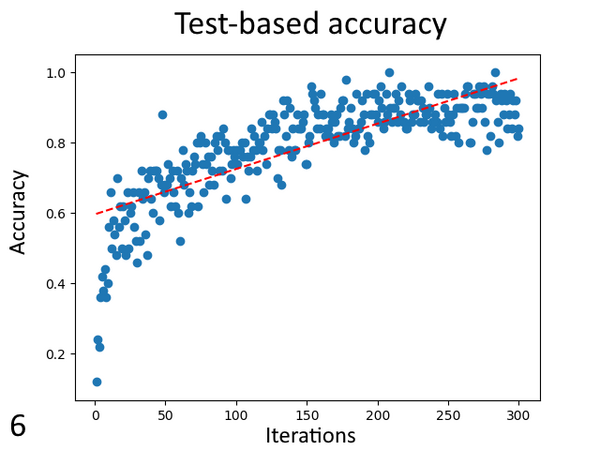

The Effect of Varying Training on Neural Network Weights and Visualizations

Neural networks are used throughout modern society to solve many problems once thought impossible for computers. Fountain and Rasmus designed a convolutional neural network and trained it to varying degrees to see whether consistent, accurate, and precise changes or patterns could be observed in its weights and visualizations. They found that training introduced and strengthened patterns in the weights and visualizations, although the observed patterns may not be consistent across all neural networks.

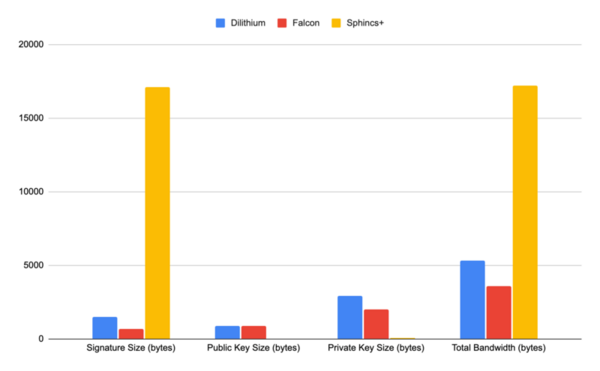

A meta-analysis on NIST post-quantum cryptographic primitive finalists

The advent of quantum computing will pose a substantial threat to the security of classical cryptographic methods, which could become vulnerable to quantum-based attacks. In response, the field of post-quantum cryptography has emerged, aiming to develop algorithms that can withstand the computational power of quantum computers. Our research focused on four quantum-resistant algorithms selected for standardization by the U.S. National Institute of Standards and Technology (NIST) in 2022: CRYSTALS-Kyber, CRYSTALS-Dilithium, FALCON, and SPHINCS+. This study evaluated the security, performance, and comparative attributes of the four algorithms, considering factors such as key size, encryption/decryption or signing/verification speed, and complexity. Comparing the algorithms against each other and against existing quantum-resistant algorithms provided insights into the strengths and weaknesses of each. This research also explored potential applications and future directions in quantum-resistant cryptography. We found that the NIST algorithms were substantially more effective and efficient than classical cryptographic algorithms, and that implementing them substantially reduced the vulnerability of cryptographic systems to quantum-based attacks compared with classical methods. Ultimately, this work underscores the need to adapt cryptographic techniques as quantum computing capabilities advance, offering valuable insights for researchers and practitioners in the field.

Part of speech distributions for Grimm versus artificially generated fairy tales

Here, the authors compared the distributions of parts of speech in fairy tales by the Brothers Grimm and in artificially generated fairy tales. They used ChatGPT to generate a novel dataset of fairy tales and found statistical differences between the artificially generated and human-written texts in their distributions of parts of speech.
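
As an illustration of how two part-of-speech distributions can be compared statistically, the sketch below computes Pearson's chi-square statistic for a 2 × k table of tag counts in plain Python. The summary does not name the test the authors used, and the counts here are hypothetical; in practice they would come from running a part-of-speech tagger (for example, NLTK or spaCy) over each corpus.

```python
def chi_square_2xk(counts_a, counts_b):
    """Pearson chi-square statistic and degrees of freedom for two tag-count dicts."""
    # Keep only tags that occur at least once in either corpus.
    tags = sorted(
        t for t in set(counts_a) | set(counts_b)
        if counts_a.get(t, 0) + counts_b.get(t, 0) > 0
    )
    n_a = sum(counts_a.get(t, 0) for t in tags)
    n_b = sum(counts_b.get(t, 0) for t in tags)
    if n_a == 0 or n_b == 0:
        raise ValueError("both corpora need at least one tagged token")
    total = n_a + n_b
    stat = 0.0
    for t in tags:
        col = counts_a.get(t, 0) + counts_b.get(t, 0)
        for observed, row_total in ((counts_a.get(t, 0), n_a), (counts_b.get(t, 0), n_b)):
            # Expected count under the hypothesis that both corpora share one distribution.
            expected = row_total * col / total
            stat += (observed - expected) ** 2 / expected
    return stat, len(tags) - 1


# Hypothetical tag counts for the two corpora.
grimm = {"NOUN": 30, "VERB": 20}
generated = {"NOUN": 20, "VERB": 30}
stat, dof = chi_square_2xk(grimm, generated)  # stat == 4.0, dof == 1
```

With one degree of freedom, the 5% critical value of the chi-square distribution is about 3.84, so a statistic of 4.0 would be significant at that level. If SciPy is available, `scipy.stats.chi2_contingency` returns the statistic and p-value directly (pass `correction=False` to match this uncorrected statistic on a 2 × 2 table).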

The Effect of Interactive Electronics Use on Psychological Well Being and Interpersonal Relationship Quality in Adults

In recent years, use of interactive electronic devices such as computers, smartphones, and tablets has increased dramatically. Many studies have examined the potential adverse effects of excessive use of such devices on children and adolescents, but the effects on adults are not well understood. In this study, the authors examined the relationship between adults' use of interactive electronic devices and a variety of clinical measures of psychological well-being. They found that, by some metrics, higher use of interactive electronic devices was associated with several adverse psychological outcomes, suggesting that such usage patterns deserve more careful consideration in clinical settings.

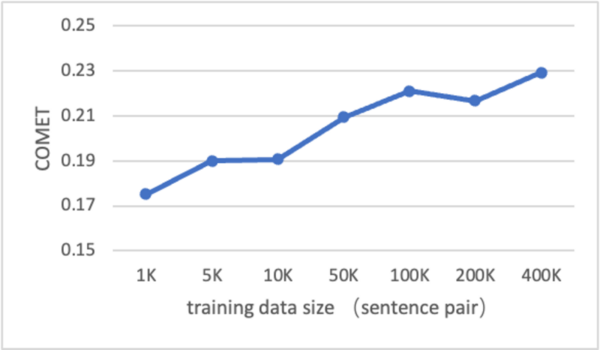

Large Language Models are Good Translators

Machine translation remains a challenging area of artificial intelligence. Neural machine translation (NMT) has made significant strides over the past decade but still faces hurdles, particularly in translation quality, because it relies on expensive bilingual training data. This study explores whether large language models (LLMs), like GPT-4, can be effectively adapted for translation tasks and outperform traditional NMT systems.
