Recurrent neural networks (RNNs) are useful for text generation because they can generate each output in the context of previous ones. Baroque music and language are similar: every word or note exists in the context of others, and both follow strict rules. The authors hypothesized that if music is represented in a text format, an RNN designed to generate language could train on it and create music structurally similar to Bach’s. They found that the music generated by their RNN shared a similar structure with Bach’s music in the input dataset, whereas Bachbot’s outputs differed significantly from their RNN’s outputs and were therefore less similar to Bach’s repertoire.


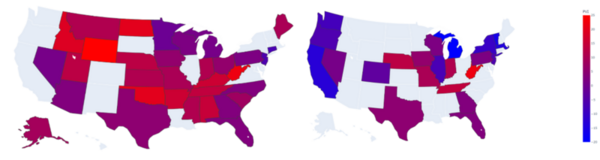

Who controls U.S. politics? An analysis of major political endorsements in U.S. midterm elections

The authors analyze political endorsement patterns and impacts from the 2018 and 2020 midterm elections and find that such endorsements may be predictable based on the ideological and demographic factors of the endorser.
